From Rushes to Broadcast: Automating News Video Workflows

In broadcast news, time is the scarcest resource. A breaking story can go from first report to scheduled airtime in under an hour, and the video workflow that transforms raw field footage into a polished news segment must keep pace. Every minute saved in logging, editing, review, and delivery is a minute that can be redirected to journalism.

Yet many newsrooms still rely on workflows built around manual processes: producers watching raw footage in real time to log it, editors searching through hours of material to find the right shot, and multiple rounds of review that depend on people being physically available. These bottlenecks are not just inefficient; they directly limit a newsroom's capacity to cover stories.

AI-powered automation is changing this equation, not by replacing journalists and editors but by accelerating the mechanical steps that consume time without adding editorial value. This article walks through each stage of the broadcast news video workflow and examines how AI can speed it up.

Stage 1: Ingest

The Traditional Process

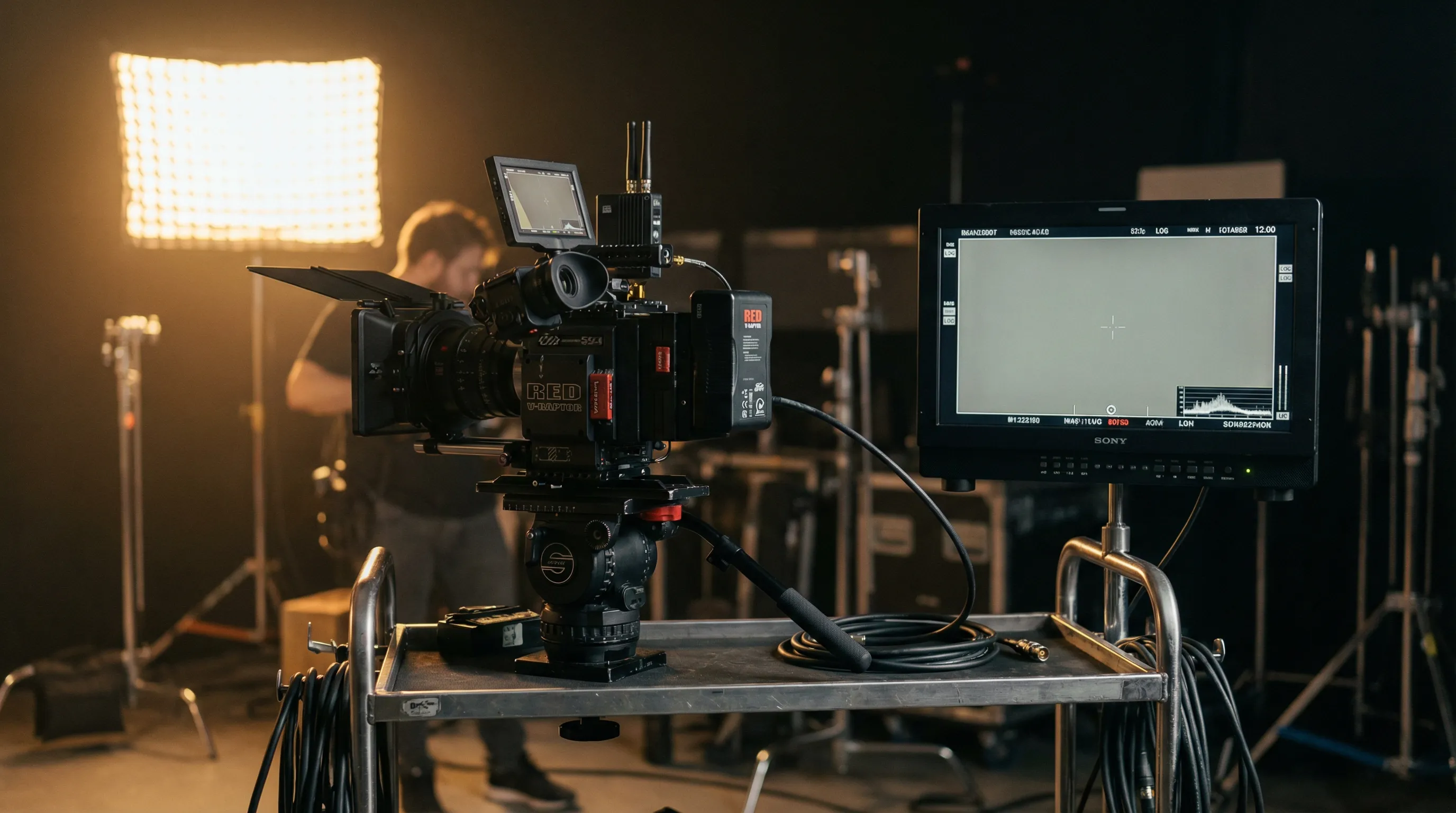

Raw footage arrives in the newsroom from multiple sources: camera crews in the field, satellite feeds, agency wire services, social media, and increasingly, drones and body cameras. Each source may use different formats, codecs, and resolutions. The ingest process involves receiving this material, converting it to the newsroom's standard format, and registering it in the media asset management (MAM) system.

In many newsrooms, ingest is a semi-manual process. An operator monitors incoming feeds, starts and stops recordings, labels clips with basic metadata (source, date, story slug), and routes material to the appropriate storage.

How AI Accelerates It

Automated ingest systems can monitor all incoming sources continuously, detecting when new material arrives and processing it without operator intervention. AI adds a layer of analysis at the point of ingest:

- Automatic format detection and transcoding: The system identifies the incoming format and converts it to the newsroom standard without manual configuration.

- Duplicate detection: AI identifies when the same footage arrives from multiple sources, preventing redundant storage and confusion.

- Initial content analysis: Even during ingest, AI can begin analyzing the content: detecting faces, identifying locations from visual landmarks, and flagging potentially sensitive material (graphic content, identifiable minors).

The result is that footage arrives in the MAM system faster, cleaner, and with a richer initial metadata layer than manual ingest can provide.
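As a rough illustration of the duplicate-detection step, the sketch below fingerprints incoming clips with a SHA-256 hash and reports when the same bytes have been seen before. The `IngestRegistry` class and the clip ids are hypothetical stand-ins for a MAM lookup. Note the simplification: hashing only catches byte-identical files, whereas footage re-encoded by different sources would need perceptual (content-based) fingerprinting, which is not shown here.

```python
import hashlib
from typing import Dict, Optional


def content_fingerprint(data: bytes) -> str:
    """Return a SHA-256 hex digest of the raw clip bytes."""
    return hashlib.sha256(data).hexdigest()


class IngestRegistry:
    """Hypothetical in-memory stand-in for a MAM duplicate check."""

    def __init__(self) -> None:
        # Maps fingerprint -> id of the first clip that carried those bytes.
        self._seen: Dict[str, str] = {}

    def register(self, clip_id: str, data: bytes) -> Optional[str]:
        """Register a clip; return the id of an earlier identical clip, if any."""
        fingerprint = content_fingerprint(data)
        if fingerprint in self._seen:
            return self._seen[fingerprint]
        self._seen[fingerprint] = clip_id
        return None


registry = IngestRegistry()
first = registry.register("agency-feed-001", b"\x00\x01fake-video-bytes")
second = registry.register("satellite-007", b"\x00\x01fake-video-bytes")
third = registry.register("field-crew-042", b"different-bytes")
print(first, second, third)
```

Here the second clip is reported as a duplicate of the first, while the third is accepted as new material.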

Stage 2: Logging and Cataloging

The Traditional Process

Logging is one of the most time-consuming steps in the news workflow. A producer or logger watches raw footage, often in real time, noting what each segment contains: who is speaking, what is being shown, which sound bites are usable, and where the strongest visual material is. For a 30-minute tape of raw rushes, this can take 30 to 60 minutes of focused attention.

In a busy newsroom handling dozens of incoming feeds daily, logging creates a significant bottleneck. Unlogged footage is effectively invisible; editors cannot use what they cannot find.

How AI Accelerates It

AI-powered logging cuts this bottleneck from an hour of viewing to minutes of processing:

- Automatic transcription: Speech recognition generates a complete transcript of all audio, typically within minutes of ingest. Producers can read a transcript in a fraction of the time it takes to watch footage.

- Speaker identification: AI identifies and labels different speakers throughout the footage, making it easy to locate specific interviews or sound bites.

- Scene detection and description: Computer vision analyzes the visual content, detecting scene changes, identifying settings (press conference, street scene, aerial shot), and describing key visual elements.

- Named entity recognition: AI identifies names of people, organizations, and places mentioned in speech, automatically tagging the footage with relevant entities.

- Sentiment and tone analysis: The system can flag emotionally charged segments, identifying powerful sound bites or contentious exchanges that editors are likely to seek.

With AI logging, a 30-minute block of raw rushes can be fully indexed and searchable within minutes of arriving in the newsroom, rather than after an hour of manual review.
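To make "indexed and searchable" concrete, here is a minimal sketch of a keyword index built over transcript segments. It assumes the speech recognition and speaker identification steps have already produced timestamped, speaker-labeled segments; the `Segment` structure and the sample text are invented for illustration and do not reflect any particular product's format.

```python
import re
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Segment:
    start: float   # seconds from the start of the clip
    end: float
    speaker: str
    text: str


def tokenize(text: str) -> List[str]:
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def build_index(segments: List[Segment]) -> Dict[str, List[int]]:
    """Map each word to the positions of the segments that contain it."""
    index: Dict[str, List[int]] = defaultdict(list)
    for position, segment in enumerate(segments):
        for word in set(tokenize(segment.text)):
            index[word].append(position)
    return index


def search(index: Dict[str, List[int]], segments: List[Segment], query: str) -> List[Segment]:
    """Return segments containing every word of the query, in time order."""
    words = tokenize(query)
    if not words:
        return []
    hits = set(index.get(words[0], []))
    for word in words[1:]:
        hits &= set(index.get(word, []))
    return [segments[i] for i in sorted(hits)]


segments = [
    Segment(0.0, 12.5, "Speaker 1", "The council approved the housing budget last night."),
    Segment(12.5, 30.0, "Speaker 2", "Residents say the housing plan ignores flooding risk."),
    Segment(30.0, 41.0, "Speaker 1", "We will review the flooding maps next month."),
]
index = build_index(segments)
for hit in search(index, segments, "housing flooding"):
    print(f"{hit.start:.1f}-{hit.end:.1f} {hit.speaker}: {hit.text}")
```

Because every hit carries its start and end time, a producer can jump straight to the matching moment in the footage instead of scrubbing for it.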

Stage 3: Story Research and Clip Selection

The Traditional Process

When a journalist or producer begins assembling a story, they need to find relevant footage across the newsroom's entire archive. This typically involves searching the MAM system using keywords, browsing recent ingest logs, and asking colleagues whether they remember seeing particular material.

For breaking stories that draw on archived footage (background on a recurring issue, historical context, file footage of key individuals), finding the right clips can take considerable time, particularly if the archive is large and inconsistently cataloged.

How AI Accelerates It

AI-powered semantic search allows journalists to find footage based on meaning rather than exact keyword matches:

- Natural language queries: A journalist can search for "mayor speaking about housing policy" and find relevant clips even if the original metadata uses different terminology.

- Visual search: Searching for "aerial footage of the river district" surfaces matching shots based on visual content analysis, not just text tags.

- Related content suggestions: When a journalist pulls one clip, the system can suggest related footage from the archive, surfacing material that might otherwise be overlooked.

- Cross-referencing: AI can connect footage to running stories, beat assignments, and editorial calendars, proactively suggesting relevant archive material when a new story is being planned.
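One common way to implement meaning-based search is to compare embedding vectors by cosine similarity. The sketch below assumes that some embedding model has already turned the query and each clip's description into vectors; the three-dimensional vectors and clip ids here are hand-made toy values for illustration only, and this is not a description of how any specific platform works.

```python
import math
from typing import Dict, List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_clips(query_vec: Sequence[float],
               clip_vecs: Dict[str, Sequence[float]],
               top_k: int = 3) -> List[str]:
    """Return the ids of the top_k clips most similar to the query."""
    scored = [(cosine(query_vec, vec), clip_id) for clip_id, vec in clip_vecs.items()]
    scored.sort(reverse=True)
    return [clip_id for _, clip_id in scored[:top_k]]


# Toy embeddings: the axes loosely stand for (politics, housing, aerial).
clip_vecs = {
    "mayor-presser": [0.9, 0.8, 0.0],
    "river-drone": [0.0, 0.1, 0.95],
    "council-vote": [0.8, 0.3, 0.1],
}
# Assumed embedding of the query "mayor speaking about housing policy".
query_vec = [0.7, 0.9, 0.0]
print(rank_clips(query_vec, clip_vecs, top_k=2))
```

The clip need not share any keyword with the query; it only has to sit close to it in the embedding space, which is what lets the search tolerate different terminology in the original metadata.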

WIKIO AI's semantic search capability is particularly relevant here, enabling newsrooms to treat their entire archive as a searchable, intelligent resource rather than a static storage system.

Stage 4: Rough Cut Assembly

The Traditional Process

Editors assemble rough cuts by selecting clips, arranging them on a timeline, and cutting them together to create a coherent narrative. For a standard news package (1.5 to 3 minutes), this process typically takes 30 minutes to several hours, depending on the complexity of the story and the volume of source material.

Much of the editor's time is spent on mechanical tasks: scrubbing through footage to find the right in and out points, arranging clips in sequence, and adjusting timing.

How AI Accelerates It

AI assists the rough cut process in several ways:

- Transcript-based editing: Instead of scrubbing through video, editors can work from the AI-generated transcript, selecting sound bites by highlighting text. The system automatically locates the corresponding video segments.

- AI-suggested sequences: Based on the selected clips and the story angle, AI can suggest an initial arrangement that follows common news package structures (establishing shot, sound bite, B-roll sequence, stand-up, closing).

- Automatic B-roll matching: When the editor selects a sound bite about a specific topic, AI can suggest visually relevant B-roll from the available footage, reducing the time spent searching for coverage shots.

- Jump cut detection: AI identifies potential jump cuts and other visual discontinuities in the assembly, flagging issues before they reach the review stage.

These tools do not replace editorial judgment. The journalist still decides what the story is, which angles to emphasize, and how to structure the narrative. AI simply accelerates the mechanical work of finding, selecting, and arranging the raw material.
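The mechanics of transcript-based editing can be sketched as a lookup from highlighted text to timecodes. The example assumes the transcript includes word-level timestamps, represented here as hypothetical `(word, start, end)` tuples in seconds; real systems will differ in format and in how they handle fuzzy matches.

```python
from typing import List, Optional, Tuple

Word = Tuple[str, float, float]  # (spoken word, start seconds, end seconds)


def normalize(token: str) -> str:
    """Lowercase a token and strip surrounding punctuation."""
    return token.lower().strip(".,!?;:\"'")


def find_in_out(words: List[Word], phrase: str) -> Optional[Tuple[float, float]]:
    """Return (in_point, out_point) for the first exact match of the phrase."""
    target = [t for t in (normalize(p) for p in phrase.split()) if t]
    if not target:
        return None
    spoken = [normalize(word) for word, _, _ in words]
    length = len(target)
    for i in range(len(spoken) - length + 1):
        if spoken[i:i + length] == target:
            # In point is the start of the first word, out point the end of the last.
            return (words[i][1], words[i + length - 1][2])
    return None


words = [
    ("We", 10.0, 10.2), ("will", 10.2, 10.4), ("rebuild", 10.4, 10.9),
    ("the", 10.9, 11.0), ("bridge", 11.0, 11.5), ("by", 11.5, 11.6),
    ("spring.", 11.6, 12.1),
]
print(find_in_out(words, "rebuild the bridge"))
```

The editor highlights text; the in and out points fall out of the word timings, and the editor remains free to trim or extend them by ear.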

Stage 5: Editorial Review

The Traditional Process

Completed packages go through one or more rounds of editorial review. A senior editor or producer watches the piece, provides feedback on content, accuracy, balance, and presentation, and sends it back for revisions if needed. In a fast-moving newsroom, getting the right person to review a piece at the right time can be a scheduling challenge.

How AI Accelerates It

AI supports the review process by providing automated checks that catch common issues before human review:

- Fact-checking assistance: AI can cross-reference claims made in the package against known data sources and flag potential inaccuracies for human verification.

- Compliance checking: Automated detection of content that may require legal review, such as identifiable minors, potentially defamatory statements, or footage from restricted sources.

- Bias and balance analysis: AI can analyze the distribution of perspectives in a piece, alerting editors if a story appears to give disproportionate weight to one side.

- Technical quality checks: Automated verification of audio levels, color consistency, and resolution standards before the piece proceeds to graphics and finishing.

These AI-assisted checks do not replace editorial oversight. They augment it by ensuring that human reviewers can focus on the substantive editorial questions rather than catching technical or procedural issues.
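As one simple proxy for the balance check, a system could total the airtime given to each perspective and flag a package where one side dominates. In the sketch below the perspective labels are assumed to come from a human or an upstream classifier, and the 70% threshold is an arbitrary illustrative value, not an editorial standard. Airtime share is only one signal; it says nothing about framing or tone.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

SoundBite = Tuple[str, float, float]  # (perspective label, start seconds, end seconds)


def airtime_share(sound_bites: List[SoundBite]) -> Dict[str, float]:
    """Return each perspective's fraction of total sound-bite time."""
    totals: Dict[str, float] = defaultdict(float)
    for perspective, start, end in sound_bites:
        totals[perspective] += end - start
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    return {p: t / grand_total for p, t in totals.items()}


def flag_imbalance(shares: Dict[str, float], threshold: float = 0.7) -> List[str]:
    """List perspectives whose share exceeds the (assumed) threshold."""
    return [p for p, share in shares.items() if share > threshold]


sound_bites = [
    ("supporters", 0.0, 40.0),
    ("opponents", 40.0, 50.0),
    ("supporters", 50.0, 80.0),
]
shares = airtime_share(sound_bites)
print(shares, flag_imbalance(shares))
```

A flag like this is a prompt for the reviewing editor, who decides whether the imbalance is justified by the story.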

Stage 6: Graphics and Lower Thirds

The Traditional Process

After editorial approval, the graphics department adds name supers (lower thirds), location identifiers, data visualizations, maps, and other visual elements. This requires coordination between the graphics team and the producing team, with potential for errors in names, titles, and data.

How AI Accelerates It

AI can automate much of the graphics workflow:

- Automatic name super generation: Using speaker identification and entity recognition from the logging stage, AI can pre-populate lower third templates with the correct names and titles, ready for human verification.

- Data visualization: For stories involving statistics, AI can generate chart and graph templates from structured data, reducing the time graphics artists spend on routine visualizations.

- Template matching: AI can suggest appropriate graphic templates based on story type, maintaining visual consistency across the newscast.
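The name super step can be pictured as turning speaker segments from the logging stage into draft lower thirds that await human sign-off. In this sketch the `LowerThird` structure, the title directory, and the names are all hypothetical; the key design choice is that every draft starts unverified and unknown titles are left blank rather than guessed.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class LowerThird:
    name: str
    title: str
    show_at: float          # seconds into the package
    verified: bool = False  # a human must confirm before air


def draft_lower_thirds(speaker_segments: List[Tuple[str, float, float]],
                       directory: Dict[str, str]) -> List[LowerThird]:
    """Create one draft per speaker, timed to their first appearance."""
    drafts: List[LowerThird] = []
    seen = set()
    for speaker, start, _end in speaker_segments:
        if speaker in seen:
            continue
        seen.add(speaker)
        # Leave the title empty when the directory has no entry.
        drafts.append(LowerThird(speaker, directory.get(speaker, ""), start))
    return drafts


speaker_segments = [
    ("Maria Lopez", 4.0, 19.0),
    ("Unknown Speaker 1", 19.0, 30.0),
    ("Maria Lopez", 30.0, 42.0),
]
directory = {"Maria Lopez": "City Council Member"}
drafts = draft_lower_thirds(speaker_segments, directory)
for draft in drafts:
    print(draft)
```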

Stage 7: Final Approval and Playout

The Traditional Process

The final package goes through a last technical and editorial check before being loaded into the playout system for broadcast. This includes verifying that the piece meets technical broadcast standards (loudness levels, safe area compliance, closed caption accuracy) and confirming that all required elements are present.

How AI Accelerates It

AI-powered quality assurance can verify broadcast compliance automatically:

- Loudness normalization: Automated adjustment to meet EBU R128 or other applicable loudness standards.

- Closed caption verification: AI-generated captions are checked against the final audio for accuracy and timing.

- Content standards compliance: Final automated check for any content that may have been introduced during editing that requires additional review.
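For the loudness step, the core arithmetic is small. EBU R128 sets a target programme loudness of -23 LUFS, so the required gain is the target minus the measured integrated loudness. The sketch below assumes the measurement has already been taken by a compliant meter (the measurement algorithm itself is not shown), and it ignores peak limiting, which a real normalizer also has to handle.

```python
EBU_R128_TARGET_LUFS = -23.0  # target programme loudness in EBU R128


def gain_to_target(measured_lufs: float,
                   target_lufs: float = EBU_R128_TARGET_LUFS) -> float:
    """Gain in dB needed to move the measured loudness to the target."""
    return target_lufs - measured_lufs


def db_to_linear(gain_db: float) -> float:
    """Convert a gain in dB to a linear amplitude multiplier."""
    return 10 ** (gain_db / 20)


measured = -17.0  # assumed meter reading for a package mixed too loud
gain_db = gain_to_target(measured)
scale = db_to_linear(gain_db)
print(f"apply {gain_db:+.1f} dB (multiply samples by {scale:.3f})")
```

A package measured at -17 LUFS is 6 dB too loud, so its samples are scaled to roughly half their amplitude.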

The Compound Effect

The real power of AI in news workflows is not in any single step but in the compound effect of accelerating every step. Because the stages run in sequence, savings of 20% to 50% at each of the seven stages cut the end-to-end time by the same proportion. A package that previously took four hours from ingest to playout might be completed in somewhere between two hours and a little over three. At a saving of about 40% per package, a newsroom that could produce 15 packages per day might produce 25 with the same staff.
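The arithmetic behind these figures can be checked with a short calculation. The per-stage durations below are invented so that they sum to four hours, and the throughput formula assumes output is limited only by staff time per package, which is a simplification.

```python
from typing import Sequence


def accelerated_total(stage_minutes: Sequence[float],
                      savings: Sequence[float]) -> float:
    """Total minutes after applying a fractional saving to each stage."""
    return sum(m * (1 - s) for m, s in zip(stage_minutes, savings))


def throughput(baseline_packages: float, time_saved_fraction: float) -> float:
    """Packages per day if each package takes proportionally less staff time."""
    return baseline_packages / (1 - time_saved_fraction)


# Hypothetical minutes for the seven stages, ingest through playout (240 total).
stage_minutes = [15, 45, 30, 75, 30, 25, 20]

best_case = accelerated_total(stage_minutes, [0.5] * 7)   # every stage saves 50%
worst_case = accelerated_total(stage_minutes, [0.2] * 7)  # every stage saves 20%
print(f"{best_case:.0f} to {worst_case:.0f} minutes instead of {sum(stage_minutes)}")
print(f"{throughput(15, 0.4):.0f} packages per day at a 40% saving")
```

Under these assumptions the four-hour package lands between 120 and 192 minutes, and a 40% saving per package is what turns 15 packages a day into 25.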

This is not about doing less journalism. It is about doing more. Journalists spend less time on mechanical tasks and more time on reporting, interviewing, and analysis. Editors focus on storytelling rather than file management. Producers oversee more stories without sacrificing quality.

For broadcast news organizations operating under relentless deadline pressure, AI-powered workflow automation is not a luxury. It is a competitive necessity. Platforms like WIKIO AI provide the integrated infrastructure that makes this acceleration possible, connecting every step from ingest to delivery in a single, AI-enhanced pipeline.

The newsrooms that embrace these tools will break more stories, provide deeper coverage, and serve their audiences better. Those that do not will find themselves outpaced by competitors who have figured out how to do more with the same resources and the same unforgiving deadlines.