AI Dubbing with Lip-Sync: From One Language to Fifty

Video is the dominant format for communication across nearly every industry. Training programs, product launches, executive messages, marketing campaigns, customer education: all of it runs on video. But one challenge stops most organizations from reaching their full global audience: language.

Producing a single video is already a significant investment. Producing that same video in fifty languages using traditional methods (hiring voice actors, booking studios, coordinating scripts, and editing each version) multiplies the cost and timeline many times over. For most teams, the math simply does not work. So content stays in one or two languages, and the rest of the audience is left out.

AI dubbing with lip-sync technology changes this equation entirely.

How Traditional Dubbing Works (and Why It Does Not Scale)

Traditional dubbing is a craft that has served the film and television industry for decades. It involves several labor-intensive steps:

- Script translation: A translator adapts the script into the target language, adjusting for cultural nuances and lip-sync timing.

- Casting: Voice actors are selected to match the tone and character of the original speakers.

- Recording: Each actor records their lines in a professional studio, often requiring multiple takes to match the pacing of the original.

- Mixing: Audio engineers blend the new voice tracks with the original background audio and sound effects.

- Quality review: The final product is reviewed for synchronization, naturalness, and accuracy.

For a single ten-minute corporate video, this process can take two to four weeks per language and cost thousands of euros. Multiply that by fifty languages and, even with several languages in production at once, you are looking at months of production time and a six-figure budget.

This is why the vast majority of corporate video content exists in only one language. It is not a strategic choice; it is a resource constraint.

What AI Dubbing Brings to the Table

AI dubbing automates the translation, voice synthesis, and audio mixing steps using machine learning models that have been trained on vast amounts of multilingual speech data. The process works as follows:

Automatic Transcription and Translation

The AI first transcribes the original audio with high accuracy, then translates the transcript into the target language. Modern neural machine translation models handle not just word-for-word conversion but contextual adaptation, preserving the intent and tone of the original message.

Voice Cloning and Synthesis

Rather than using generic text-to-speech voices, advanced AI dubbing systems clone the original speaker's voice characteristics (pitch, cadence, tone, and emotional inflection) and reproduce them in the target language. The result is a dubbed version that sounds like the same person speaking a different language, not a robotic replacement.

Lip-Sync Adjustment

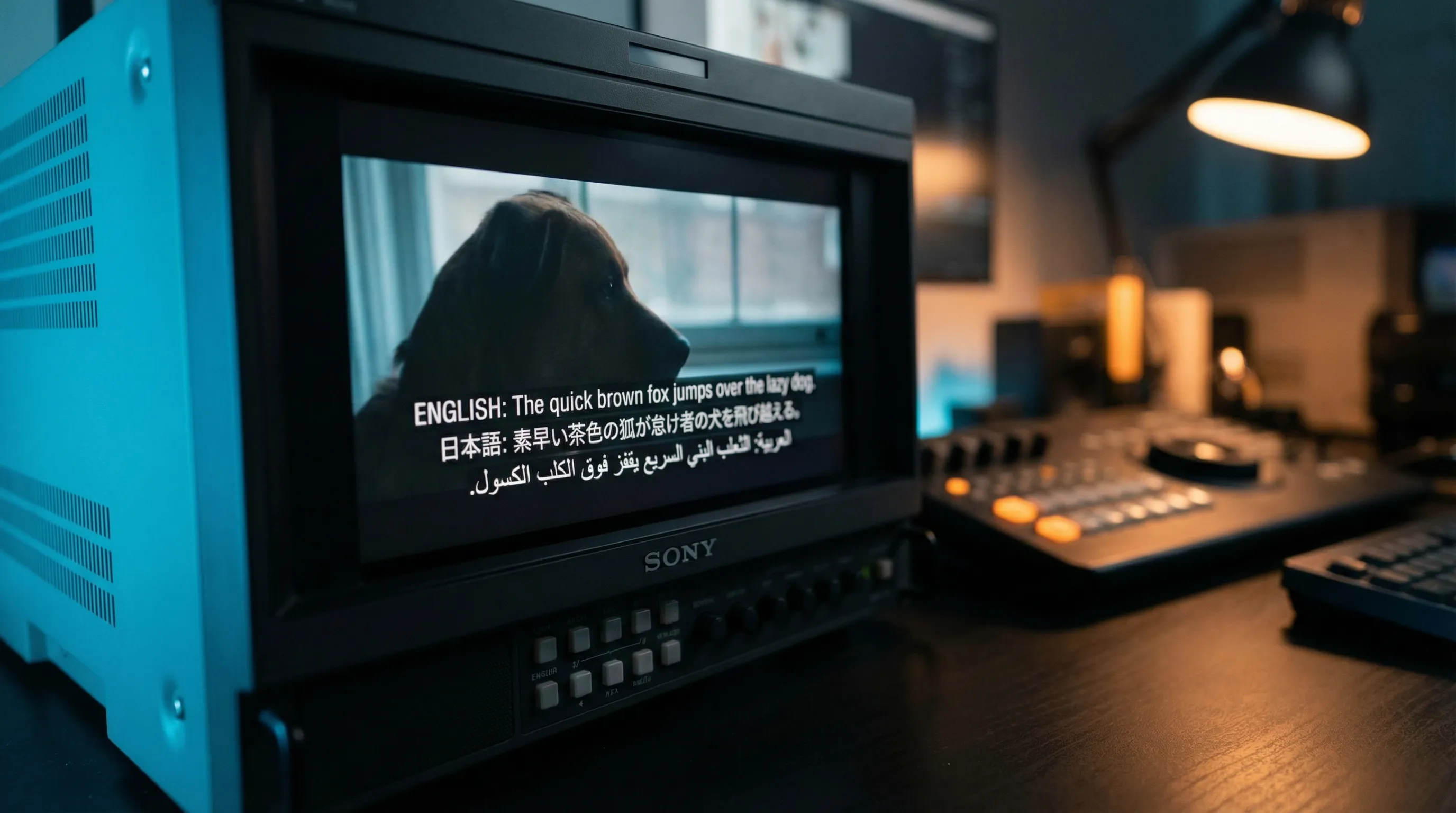

This is where the technology becomes truly remarkable. Standard dubbing, even with human voice actors, often produces a noticeable mismatch between mouth movements and audio. The speaker's lips clearly form sounds that do not match what the audience hears.

AI lip-sync technology addresses this by subtly adjusting the visual representation of the speaker's mouth to match the new audio track. Using generative models trained on facial dynamics, the system modifies lip movements frame by frame so they correspond naturally to the dubbed speech. The result is a video that looks and feels native to each language.
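
The stages described above (transcription, translation, voice synthesis, lip-sync) can be pictured as a simple per-segment pipeline. The following is only a minimal sketch under stated assumptions: the `Segment` and `DubbedSegment` types, the `dub_segments` function, and the stand-in `fake_translate` and `fake_synthesize` stages are all hypothetical names invented for illustration, not the API of any real dubbing product. Real systems replace the stand-ins with speech recognition, machine translation, and voice-cloning models.

```python
from dataclasses import dataclass, replace
from typing import Callable, List, Tuple


@dataclass(frozen=True)
class Segment:
    """One timed utterance produced by the transcription step."""
    speaker: str
    start: float  # seconds from the start of the video
    end: float
    text: str


@dataclass(frozen=True)
class DubbedSegment:
    """A translated segment, its synthesized audio, and its lip-sync window."""
    segment: Segment
    audio: bytes
    resync_window: Tuple[float, float]


def dub_segments(
    segments: List[Segment],
    target_lang: str,
    translate: Callable[[str, str], str],
    synthesize: Callable[[str, str, str], bytes],
) -> List[DubbedSegment]:
    """Run translate -> synthesize per segment, keeping speaker and timing."""
    dubbed = []
    for seg in segments:
        translated = replace(seg, text=translate(seg.text, target_lang))
        audio = synthesize(translated.text, seg.speaker, target_lang)
        # Assumption: the lip-sync stage only needs frames where someone speaks.
        dubbed.append(DubbedSegment(translated, audio, (seg.start, seg.end)))
    return dubbed


# Stand-in stages so the sketch runs without any real model.
def fake_translate(text: str, lang: str) -> str:
    return f"[{lang}] {text}"


def fake_synthesize(text: str, speaker: str, lang: str) -> bytes:
    return f"{speaker}|{lang}|{text}".encode("utf-8")


transcript = [
    Segment("ceo", 0.0, 2.5, "Welcome, everyone."),
    Segment("cfo", 2.5, 6.0, "Here are the numbers."),
]
french = dub_segments(transcript, "fr", fake_translate, fake_synthesize)
for d in french:
    print(d.segment.speaker, d.resync_window, d.segment.text)
```

Keeping the speaker label and timestamps attached to every segment is what lets a later stage pick the right cloned voice and regenerate only the frames in which that person is talking.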

The Quality Question

The first reaction most people have to AI dubbing is skepticism about quality. And five years ago, that skepticism was entirely justified. Early text-to-speech systems sounded robotic, translations were literal and awkward, and lip-sync was nonexistent.

The current generation of AI dubbing technology is fundamentally different. Several advances have converged to make high-quality automated dubbing a reality:

- Large language models produce translations that read naturally in the target language, accounting for idiomatic expressions and cultural context.

- Neural voice synthesis has reached a point where cloned voices are nearly indistinguishable from real recordings in blind tests.

- Generative video models can modify facial movements with enough precision that viewers do not notice the adjustments.

This does not mean AI dubbing is perfect for every use case. A feature film destined for theatrical release will still benefit from the artistry of human voice actors. But for corporate communications, training videos, product tutorials, marketing content, and internal messaging, AI dubbing delivers quality that meets or exceeds audience expectations.

Real-World Applications

Global Corporate Communications

When a CEO records a company-wide address, AI dubbing can deliver that message in every language spoken across the organization within hours. Every employee hears the message from what appears to be the same person, in their native language. This is not a future scenario; organizations using WIKIO AI are doing this today.

Multilingual Training Programs

Learning and development teams invest heavily in training video content. AI dubbing means a training program created in English can be deployed simultaneously in French, German, Spanish, Mandarin, Japanese, Portuguese, Arabic, and dozens of other languages. Employees learn more effectively in their native language, and the organization avoids the cost of producing separate versions.

International Marketing

Marketing teams can test campaigns across markets without the upfront investment of producing localized versions. A product video dubbed into fifteen languages can be deployed to assess regional interest before committing to full localized production.

Customer Support and Education

Product tutorials, onboarding videos, and support content can reach a global customer base without language barriers. Customers get a better experience, and support teams handle fewer basic questions.

The WIKIO AI Approach

WIKIO AI integrates AI dubbing with lip-sync directly into the video management workflow. When a video is uploaded, generating dubbed versions is as simple as selecting the target languages. The platform handles transcription, translation, voice cloning, lip-sync adjustment, and delivery automatically.

Several design decisions make this implementation particularly effective:

- Speaker detection: The system identifies individual speakers in multi-person videos and applies the correct cloned voice to each one.

- Glossary support: Organizations can define terminology that should be preserved or translated in specific ways, ensuring brand names, product names, and technical terms are handled correctly.

- Human review workflow: While the AI produces the initial dubbing, teams can review and adjust translations before publishing, maintaining full control over the final output.

- EU-hosted processing: All audio and video processing happens on European infrastructure, ensuring that sensitive corporate content never leaves EU jurisdiction. This is critical for organizations subject to GDPR and data sovereignty requirements.
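
To make the glossary idea in the list above concrete, one common way to enforce terminology is to swap protected terms for placeholders before translation and restore the required rendering afterwards. This is a minimal sketch of that general technique, not a description of how WIKIO AI implements glossary support; `translate_with_glossary` and `toy_translate` are hypothetical names, and the toy translator stands in for a real machine translation call.

```python
import re
from typing import Callable, Dict


def translate_with_glossary(
    text: str,
    glossary: Dict[str, str],
    translate: Callable[[str], str],
) -> str:
    """Translate text while forcing glossary terms to a fixed rendering.

    glossary maps a source-language term to the rendering required in
    the target language (identical for brand names that must not change).
    """
    restorations: Dict[str, str] = {}
    protected = text
    # Longest terms first, so a multi-word term wins over a shorter one.
    for i, term in enumerate(sorted(glossary, key=len, reverse=True)):
        token = f"__TERM{i}__"
        pattern = re.compile(r"\b" + re.escape(term) + r"\b")
        protected, count = pattern.subn(token, protected)
        if count:
            restorations[token] = glossary[term]
    translated = translate(protected)
    for token, rendering in restorations.items():
        translated = translated.replace(token, rendering)
    return translated


def toy_translate(text: str) -> str:
    # Stand-in for a real translation model (assumption for this sketch).
    return text.replace("Upload your video to", "Importez votre vidéo dans")


result = translate_with_glossary(
    "Upload your video to WIKIO AI.",
    {"WIKIO AI": "WIKIO AI"},
    toy_translate,
)
print(result)
```

The translator never sees the brand name, only the placeholder, so it has no opportunity to translate or alter it.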

The Economics of Scale

The cost structure of AI dubbing is fundamentally different from traditional dubbing. Traditional dubbing has a roughly linear cost: each additional language costs almost as much as the first. AI dubbing has a high fixed cost (building the models and infrastructure) and a near-zero marginal cost per additional language.

For organizations, this means the decision shifts from "which two or three languages can we afford?" to "why would we not make this available in every language?" When the marginal cost of adding a language is negligible, the rational choice is to maximize reach.
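
The two cost structures can be compared with a small model. The figures below are purely illustrative assumptions chosen to match the rough magnitudes mentioned earlier (thousands of euros per language for traditional dubbing); they are not real prices from any vendor, and the function names are hypothetical.

```python
import math


def traditional_cost(languages: int, per_language: float) -> float:
    """Roughly linear: every language costs about the same as the first."""
    return languages * per_language


def ai_cost(languages: int, fixed: float, marginal: float) -> float:
    """High fixed cost plus a small marginal cost per language."""
    return fixed + languages * marginal


def break_even_languages(per_language: float, fixed: float, marginal: float) -> int:
    """Smallest number of languages at which the AI route is strictly cheaper."""
    if per_language <= marginal:
        raise ValueError("AI route never becomes cheaper with these inputs")
    return math.floor(fixed / (per_language - marginal)) + 1


# Illustrative assumptions only (euros).
PER_LANGUAGE = 3_000   # traditional dubbing, per language
FIXED = 20_000         # amortized model and infrastructure cost
MARGINAL = 50          # AI dubbing, per additional language

n = break_even_languages(PER_LANGUAGE, FIXED, MARGINAL)
print("break-even at", n, "languages")
print("50 languages, traditional:", traditional_cost(50, PER_LANGUAGE))
print("50 languages, AI:", ai_cost(50, FIXED, MARGINAL))
```

Under these assumed numbers the AI route becomes cheaper from the seventh language onward, and at fifty languages the gap is 150,000 versus 22,500 euros. Different inputs move the break-even point, but the shape of the comparison stays the same as long as the marginal cost is small.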

This shift has profound implications for content equity within global organizations. Employees and customers who previously received information late, in poor translations, or not at all can now access the same quality of video content as those in the headquarters' primary language.

Looking Ahead

AI dubbing technology is improving rapidly. Voice quality, translation accuracy, and lip-sync precision all continue to advance with each model generation. Within the next few years, the distinction between AI-dubbed and natively recorded content will become imperceptible for most applications.

Organizations that adopt AI dubbing now gain an immediate competitive advantage in reaching global audiences. Those that wait will find themselves producing content in one language while their competitors communicate in fifty.

The infrastructure to make this transition is available today. WIKIO AI provides the complete pipeline from upload to multilingual delivery, making it possible to turn every video into a global asset.