How Semantic Video Search Works (and Why Keywords Aren't Enough)

If you have ever typed a keyword into your company's video library and received hundreds of irrelevant results, or, worse, zero results for footage you know exists, you are not alone. The problem is not the size of your library. The problem is the search technology behind it.

Traditional video search relies on metadata that humans manually attach to files: titles, tags, descriptions, and folder names. This approach worked when organizations managed dozens of videos. It collapses when libraries grow to thousands or tens of thousands of assets. The gap between what people remember about a video and the exact words someone used to tag it months earlier is simply too wide.

Semantic video search is designed to close that gap.

The Limits of Keyword-Based Video Search

Keyword search operates on exact matching. When you search for "product demo," the system looks for those precise characters in the metadata fields. It has no understanding of what a product demo actually is. It cannot infer that a file tagged "Q3 walkthrough" or "feature showcase" might be exactly what you need.
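To make the limitation concrete, here is a minimal Python sketch of exact keyword matching over metadata. The video IDs, titles, and tags are hypothetical. Production keyword engines usually add tokenization, stemming, and ranking, but they still depend on the words that are actually present in the metadata.

```python
# Hypothetical metadata for three videos; titles and tags are illustrative only.
library = {
    "vid-001": {"title": "Q3 walkthrough", "tags": ["sales", "q3"]},
    "vid-002": {"title": "Feature showcase", "tags": ["release"]},
    "vid-003": {"title": "Product demo for partners", "tags": ["demo"]},
}


def keyword_search(query: str, videos: dict) -> list[str]:
    """Return IDs whose title or any tag contains the query as a literal,
    case-insensitive substring. No notion of meaning is involved."""
    q = query.lower()
    hits = []
    for video_id, meta in videos.items():
        fields = [meta["title"], *meta["tags"]]
        if any(q in field.lower() for field in fields):
            hits.append(video_id)
    return hits


print(keyword_search("product demo", library))  # ['vid-003']
```

The query "product demo" never reaches the "Q3 walkthrough" or "Feature showcase" videos, even though either could be exactly what the user needs.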

This creates several real-world problems for teams:

- Inconsistent tagging: Different team members tag the same type of content differently. One person writes "interview," another writes "testimonial," a third writes "customer story." Keyword search treats these as entirely unrelated.

- Incomplete metadata: Under time pressure, people skip tagging entirely. A video with no tags becomes invisible to keyword search, no matter how valuable the content.

- No visual understanding: Keywords cannot describe what actually happens inside the video. If you need the shot where a specific product appears on screen, keyword search cannot help unless someone manually noted that detail.

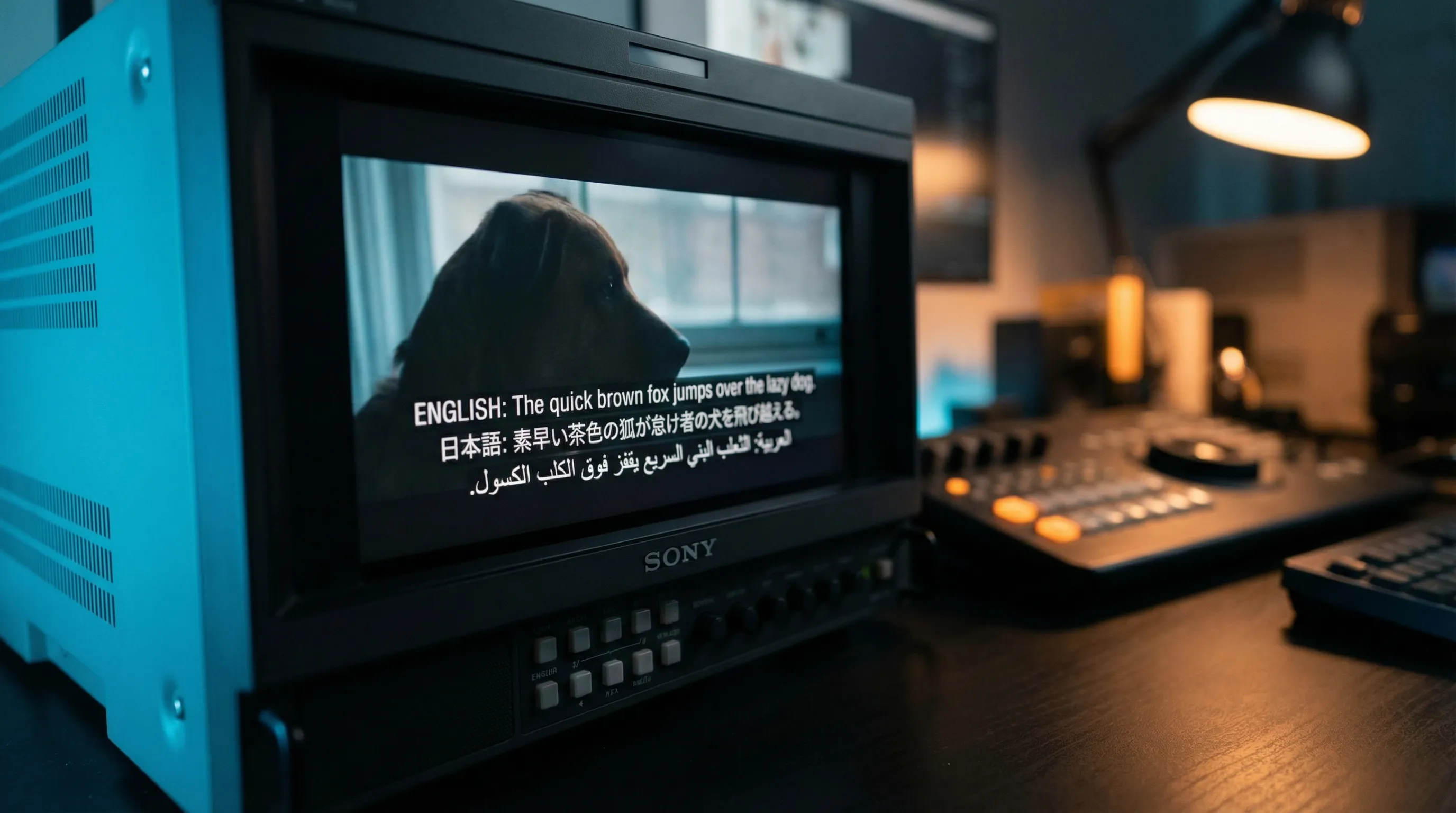

- Language barriers: Global teams produce content in multiple languages. Keyword search in English will never surface a perfectly relevant video whose metadata was written in French or German.

The result is a paradox: the more video content your organization creates, the harder it becomes to find anything.

What Semantic Search Actually Does

Semantic search replaces exact string matching with meaning-based understanding. Instead of asking "do these characters match?", semantic search asks "does this content relate to what the user is looking for?"

The technology behind this involves several AI layers working together:

1. Automatic Speech Recognition and Transcription

Spoken audio in each video is transcribed automatically by speech-to-text models, producing a timestamped textual record of what was said without any manual effort. At WIKIO AI, transcription runs the moment a video is uploaded, supporting over fifty languages out of the box.
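Speech-to-text output typically arrives as a list of short, timestamped segments. Before indexing, a system usually groups them into chunks of manageable length while keeping their start and end times, so that a search hit can point to a moment rather than a whole file. The sketch below assumes a hypothetical `Segment` type and a 30-second window. It does not call any real transcription API, and it does not describe WIKIO AI's internal pipeline.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    start: float  # seconds from the beginning of the video
    end: float
    text: str


def chunk_transcript(segments: list[Segment], window_s: float = 30.0) -> list[Segment]:
    """Group consecutive ASR segments into chunks spanning at most ~window_s
    seconds, preserving the first start time and last end time of each chunk."""
    chunks: list[Segment] = []
    current: list[Segment] = []
    for seg in segments:
        # Start a new chunk if adding this segment would exceed the window.
        if current and seg.end - current[0].start > window_s:
            chunks.append(Segment(current[0].start, current[-1].end,
                                  " ".join(s.text for s in current)))
            current = []
        current.append(seg)
    if current:  # flush the final partial chunk
        chunks.append(Segment(current[0].start, current[-1].end,
                              " ".join(s.text for s in current)))
    return chunks


# Hypothetical transcript segments
segments = [
    Segment(0.0, 10.0, "Welcome, everyone."),
    Segment(10.0, 25.0, "Today we cover the pricing model."),
    Segment(25.0, 40.0, "The base plan includes three seats."),
    Segment(40.0, 50.0, "Any questions so far?"),
]
for chunk in chunk_transcript(segments):
    print(chunk.start, chunk.end, chunk.text)  # two chunks: 0.0-25.0 s and 25.0-50.0 s
```

Each chunk carries its own time range. That range is what later lets results jump straight to the relevant moment.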

2. Visual Scene Understanding

Computer vision models analyze frames sampled throughout the video, identifying objects, actions, text on screen, environments, and even facial expressions. This means the system knows that a particular segment shows a whiteboard session, an outdoor location, or a specific product, without anyone having to describe it.
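Vision models typically emit labels for individual sampled frames. To make those labels searchable as moments, consecutive frames that share a label can be merged into time ranges. The sketch below assumes hypothetical detector output in the form of `(timestamp, set_of_labels)` pairs sampled every `step_s` seconds. The merging step is a generic technique, not a documented part of any specific product.

```python
def labels_to_ranges(frame_labels, step_s):
    """Merge per-frame label sets into (label, start, end) time ranges.

    frame_labels: list of (timestamp_seconds, set_of_labels), sorted by time
    and sampled every step_s seconds (hypothetical detector output).
    """
    open_runs = {}  # label -> start time of its current continuous run
    ranges = []
    last_t = None
    for t, labels in frame_labels:
        # Close runs for labels that are no longer visible in this frame.
        for label in list(open_runs):
            if label not in labels:
                ranges.append((label, open_runs.pop(label), t))
        # Open runs for labels that just appeared (existing runs are untouched).
        for label in labels:
            open_runs.setdefault(label, t)
        last_t = t
    # Any run still open lasts until the end of the final sampled frame.
    for label, start in open_runs.items():
        ranges.append((label, start, last_t + step_s))
    return sorted(ranges, key=lambda r: (r[1], r[0]))


frames = [
    (0.0, {"whiteboard", "person"}),
    (1.0, {"whiteboard", "person"}),
    (2.0, {"person"}),
    (3.0, {"person", "laptop"}),
]
print(labels_to_ranges(frames, step_s=1.0))
# [('person', 0.0, 4.0), ('whiteboard', 0.0, 2.0), ('laptop', 3.0, 4.0)]
```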

3. Embedding and Vector Representation

Here is where the "semantic" part truly happens. The transcribed text, visual descriptions, and existing metadata are all converted into mathematical representations called embeddings. These embeddings capture meaning in a high-dimensional space where conceptually similar content clusters together.

In this space, "product demo," "feature walkthrough," and "showing the new tool" all land near each other, because they share semantic meaning even though they share almost no words.
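"Closeness" between embeddings is commonly measured with cosine similarity, which is the cosine of the angle between two vectors. The vectors below are hand-picked three-dimensional toys chosen for illustration only. A real embedding model outputs hundreds of dimensions and learns its values from data.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between vectors a and b (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy "embeddings", hand-picked for illustration; not produced by any model.
toy_embeddings = {
    "product demo":         [0.90, 0.10, 0.05],
    "feature walkthrough":  [0.85, 0.20, 0.05],
    "showing the new tool": [0.80, 0.15, 0.10],
    "quarterly tax filing": [0.05, 0.10, 0.95],
}

anchor = toy_embeddings["product demo"]
for phrase, vec in toy_embeddings.items():
    print(f"{phrase!r}: {cosine_similarity(anchor, vec):.3f}")
```

The three phrasings about demos score close to 1.0 against "product demo". The unrelated phrase scores far lower, even though none of the demo phrasings shares more than a word with another.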

4. Query Understanding

When you type a search query, it goes through the same embedding process. The system then finds the videos (and specific moments within videos) whose embeddings are closest to your query's embedding, commonly measured by cosine similarity. You can search for "the part where Sarah explains the pricing model" and the system matches against each component of that query: the speaker, the action, and the topic.
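The retrieval step itself can be sketched as scoring each indexed moment against the query vector and keeping the best `k`. In this sketch the moment vectors and the query vector are hypothetical stand-ins for embedding-model output, which is not shown. This version is exhaustive: it compares the query against every moment, which is exactly the cost that vector indexes are built to avoid.

```python
import heapq
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def search(query_vec, moments, k=3):
    """Brute-force nearest-neighbour search.

    moments: list of (video_id, start_seconds, embedding) tuples.
    Returns up to k (score, video_id, start_seconds) tuples, best first.
    """
    scored = ((cosine(query_vec, emb), vid, start) for vid, start, emb in moments)
    return heapq.nlargest(k, scored)


# Hypothetical indexed moments (toy 3-D vectors for illustration).
moments = [
    ("town-hall", 0.0, [0.1, 0.9, 0.0]),
    ("town-hall", 312.0, [0.8, 0.2, 0.1]),
    ("onboarding", 45.0, [0.7, 0.1, 0.3]),
    ("offsite", 12.0, [0.0, 0.1, 0.9]),
]
# Stand-in for the embedding of "the part where Sarah explains the pricing model".
query = [0.9, 0.1, 0.1]

for score, vid, start in search(query, moments, k=2):
    print(f"{vid} @ {start:.0f}s  (score {score:.3f})")
```

Because each moment carries a video ID and a start time, the results are timestamps rather than whole files.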

Why This Matters for Video Teams

The practical impact of semantic video search is measured in hours saved per week. Consider these scenarios:

A marketing manager needs b-roll of a cityscape at sunset. With keyword search, this requires someone to have tagged a video with those exact terms. With semantic search, the system's visual understanding can surface every clip containing urban skylines in golden-hour lighting, even from videos primarily about something else entirely.

A compliance officer needs to find every instance where a specific claim was made across all company videos. With keyword search, this is essentially impossible without watching every video. With semantic search, a natural-language query returns timestamped results across the entire library.

A global team in Tokyo needs footage from a London workshop. The workshop was recorded and tagged in English. With WIKIO AI's semantic search, the Tokyo team can search in Japanese and still find the relevant English-language content, because a multilingual embedding model places equivalent meanings in different languages close together in the same vector space.

The Architecture Behind Fast Results

One common concern is speed. If the system needs to compare a query against every moment of every video in a large library, how can results appear in under a second?

The answer lies in vector indexing. Modern semantic search engines use approximate nearest neighbor (ANN) algorithms that organize embeddings into efficiently searchable structures. Rather than comparing against every single vector, the system navigates a pre-built index to find the closest (or near-closest) matches in milliseconds, trading a small amount of accuracy for a large gain in speed.

WIKIO AI's search infrastructure is built on this principle. Videos are indexed at upload time, so the computational cost is paid once. Because ANN lookup cost grows far more slowly than the library itself, every subsequent search stays fast as the collection expands. Whether you have five hundred videos or fifty thousand, the search response time remains consistently under one second.
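One simple ANN technique is random-hyperplane locality-sensitive hashing (LSH) for cosine similarity. Each vector gets a short bit signature recording which side of several random hyperplanes it falls on. Similar vectors tend to share signatures, so a query only scores the items in its own buckets. The sketch below is a generic illustration: graph-based indexes such as HNSW (available in libraries like FAISS and hnswlib) are a common production alternative, and this is not a description of WIKIO AI's index. The class name and parameters are hypothetical.

```python
import math
import random
from collections import defaultdict


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


class HyperplaneLSH:
    """Approximate cosine-similarity search via random-hyperplane LSH (a sketch)."""

    def __init__(self, dim, n_planes=8, n_tables=4, seed=0):
        rng = random.Random(seed)
        # Several independent hash tables improve recall: a near neighbour
        # missed by one table's signature may still collide in another.
        self.tables = []
        for _ in range(n_tables):
            planes = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_planes)]
            self.tables.append((planes, defaultdict(list)))

    @staticmethod
    def _signature(planes, vec):
        # One bit per hyperplane: which side of the plane the vector lies on.
        return tuple(sum(p * x for p, x in zip(plane, vec)) >= 0 for plane in planes)

    def add(self, item_id, vec):
        # Paid once per item, e.g. when a video is uploaded and embedded.
        for planes, buckets in self.tables:
            buckets[self._signature(planes, vec)].append((item_id, vec))

    def query(self, vec, k=3):
        # Gather candidates that share a bucket with the query in any table,
        # then score only those candidates exactly.
        candidates = {}
        for planes, buckets in self.tables:
            for item_id, v in buckets.get(self._signature(planes, vec), []):
                candidates[item_id] = v
        scored = [(cosine(vec, v), item_id) for item_id, v in candidates.items()]
        return sorted(scored, reverse=True)[:k]


# Usage with random 16-D vectors standing in for clip embeddings.
rng = random.Random(1)
vectors = {f"clip-{i}": [rng.gauss(0.0, 1.0) for _ in range(16)] for i in range(200)}
index = HyperplaneLSH(dim=16)
for clip_id, vec in vectors.items():
    index.add(clip_id, vec)
print(index.query(vectors["clip-42"], k=1))  # top hit is clip-42 with similarity ~1.0
```

More hyperplanes per table make buckets smaller and queries faster but miss more true neighbours. More tables recover some of that recall at the cost of memory. This speed-versus-accuracy dial is the "approximate" in approximate nearest neighbor.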

Beyond Search: Discovery

Perhaps the most transformative aspect of semantic video search is that it enables discovery, not just retrieval. Traditional search requires you to know what you are looking for. Semantic search lets you explore.

You can ask open-ended questions: "What have we said about sustainability?" or "Show me all customer-facing presentations from the last quarter." The system surfaces relevant content you may have forgotten existed, turning a passive archive into an active knowledge base.

For organizations investing heavily in video content, this shift from retrieval to discovery represents an entirely new way of leveraging existing assets. Content that was filmed once and forgotten can resurface exactly when it becomes relevant again.

Getting Started

The transition from keyword search to semantic search does not require re-tagging your entire library. Because semantic search derives understanding directly from the audio and visual content, it works on day one with your existing assets.

WIKIO AI processes your video library automatically upon upload, building semantic indices that make every second of every video instantly searchable. There is no manual setup, no tagging taxonomy to design, and no training period.

The era of searching for videos by guessing which keywords someone used to tag them is over. Semantic search finds what you mean, not just what you type.