Why Your Video DAM Needs to Be AI-Native

The term "Digital Asset Management" has been around for over two decades. DAM platforms were originally built to organize photographs, design files, brand assets, and documents. They did this well. Folder structures, metadata schemas, taxonomy trees, and permission models gave marketing teams and creative departments a structured way to store, find, and distribute their digital assets.

Then video happened.

Over the past decade, video has gone from a specialized content type produced by dedicated teams to the default communication medium across entire organizations. Marketing, sales, HR, training, product, support, and executive communications all run on video. The volume of video content inside organizations has grown exponentially, and the trend is only accelerating.

Most organizations responded by trying to fit video into their existing DAM systems. It has not worked well.

Where Traditional DAMs Break Down with Video

The fundamental problem is that traditional DAMs treat video as a file, when in reality video is a complex, time-based medium containing layers of information: speech, visuals, text, music, and context that unfold over minutes or hours.

The Metadata Problem

A traditional DAM requires humans to create and maintain metadata. For an image, this is manageable: a photographer can tag a photo with relevant keywords in thirty seconds. For a sixty-minute video, comprehensive manual tagging would require someone to watch the entire video, note every topic discussed, every person who appears, every product shown, and every location depicted. This takes longer than the video itself.

The result is that video metadata in traditional DAMs is sparse and inconsistent. Files get a title, maybe a description, and a handful of tags. The rich content inside the video remains invisible to the system.

The Search Problem

Because metadata is sparse, search is ineffective. Users cannot find the specific moment in a video where a particular topic is discussed. They cannot search across spoken content. They cannot find visually similar clips. They are limited to whatever someone thought to write in the description field, which is almost never enough.

The Preview Problem

When browsing a traditional DAM, users see a thumbnail and a filename. To determine whether a video is relevant, they must open it and scrub through the timeline. For a library of thousands of videos, this browse-and-scrub approach is simply not viable. People give up and reshoot content that already exists somewhere in the library.

The Processing Problem

Video requires processing that traditional DAMs were never designed to handle: transcoding to multiple formats, generating adaptive bitrate streams, creating transcripts, extracting key frames, and more. Many DAMs rely on external services or manual workflows for these tasks, creating fragmented and error-prone processes.
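To make the transcoding step concrete, here is a minimal sketch of how a set of renditions might be planned for a single upload. It only builds ffmpeg command lines and does not run them. The ladder values, the `Rendition` type, and the output naming are illustrative assumptions, not any particular platform's settings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    name: str        # label used in the output filename
    height: int      # target frame height in pixels
    video_kbps: int  # target video bitrate
    audio_kbps: int  # target audio bitrate


# Illustrative ladder (assumption); real values depend on content and audience bandwidth.
LADDER = [
    Rendition("1080p", 1080, 5000, 192),
    Rendition("720p", 720, 2800, 128),
    Rendition("360p", 360, 800, 96),
]


def ffmpeg_command(source: str, rendition: Rendition) -> list[str]:
    """Build (but do not run) an ffmpeg command for one rendition."""
    stem = source.rsplit(".", 1)[0]
    return [
        "ffmpeg", "-i", source,
        # scale=-2:H keeps the aspect ratio and forces an even width
        "-vf", f"scale=-2:{rendition.height}",
        "-c:v", "libx264", "-b:v", f"{rendition.video_kbps}k",
        "-c:a", "aac", "-b:a", f"{rendition.audio_kbps}k",
        f"{stem}_{rendition.name}.mp4",
    ]


def plan_transcodes(source: str) -> list[list[str]]:
    """One command per rung of the ladder."""
    return [ffmpeg_command(source, r) for r in LADDER]
```

This produces separate MP4 files only. Packaging them into an adaptive bitrate stream, generating transcripts, and extracting key frames are further steps, which is exactly where manual or external workflows tend to fragment.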

What "AI-Native" Actually Means

The term "AI-native" is used frequently in marketing, so it is worth defining precisely. An AI-native platform is one where artificial intelligence is not a feature bolted onto an existing architecture but the foundational design principle that shapes the entire system.

For a video DAM, being AI-native means:

Automatic Content Understanding

Every video uploaded to the platform is automatically analyzed in depth. Speech is transcribed. Speakers are identified. Visual content is recognized and described. Topics are extracted. Chapters are generated. Sentiment is detected. All of this happens without any human intervention, creating a rich content index that makes every second of every video searchable and browsable.

WIKIO AI performs this analysis at the moment of upload. By the time a user navigates to a newly uploaded video, it already has a complete transcript, identified speakers, visual descriptions, and AI-generated chapters. There is no waiting period and no manual enrichment required.

Semantic Intelligence

An AI-native DAM does not just store metadata; it understands meaning. When a user searches for "quarterly revenue discussion," the system finds segments where revenue is discussed in a quarterly context, even if those exact words were never spoken. It understands synonyms, related concepts, and contextual relationships.

This goes far beyond what any manual tagging system could achieve, because the AI processes every word spoken and every frame shown, while human taggers can only capture a fraction of the content.
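A toy illustration of the idea: transcript segments are ranked by vector similarity to the query rather than by shared keywords. The three-dimensional vectors below are assigned by hand and stand in for the output of a real embedding model, so this shows only the ranking mechanics, not actual language understanding. The `Segment` type and the sample data are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Segment:
    video: str
    start_s: float             # offset into the video, in seconds
    text: str
    vector: tuple[float, ...]  # stand-in for a learned embedding


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search(query_vector, segments, top_k=2):
    """Return the top_k segments most similar to the query vector."""
    ranked = sorted(segments, key=lambda s: cosine(query_vector, s.vector), reverse=True)
    return ranked[:top_k]


# Hand-made vectors (assumption): axes loosely mean (finance, time period, hiring).
SEGMENTS = [
    Segment("all-hands.mp4", 754.0,
            "Sales for the last three months came in above plan.", (0.9, 0.8, 0.0)),
    Segment("all-hands.mp4", 1310.0,
            "We are opening twelve new engineering roles.", (0.1, 0.0, 0.9)),
    Segment("onboarding.mp4", 42.0,
            "Your badge gives you access to the building.", (0.0, 0.1, 0.2)),
]

# Hand-assigned vector for the query "quarterly revenue discussion".
QUERY = (1.0, 0.7, 0.0)
```

Calling `search(QUERY, SEGMENTS, top_k=1)` returns the segment at 754 seconds, even though its text contains neither "quarterly" nor "revenue". Because each segment carries its own start offset, a result can point to a moment inside a video rather than to the file as a whole.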

Workflow Automation

AI-native platforms automate workflows that traditionally required manual effort:

- Auto-tagging: The system generates relevant tags based on actual content analysis, not human guesswork.

- Smart collections: Videos are automatically grouped by topic, project, speaker, or any other dimension the system identifies.

- Compliance flagging: Content that may contain sensitive information, outdated claims, or brand violations is flagged automatically.

- Highlight generation: Key moments are identified and extracted as shareable clips without manual editing.

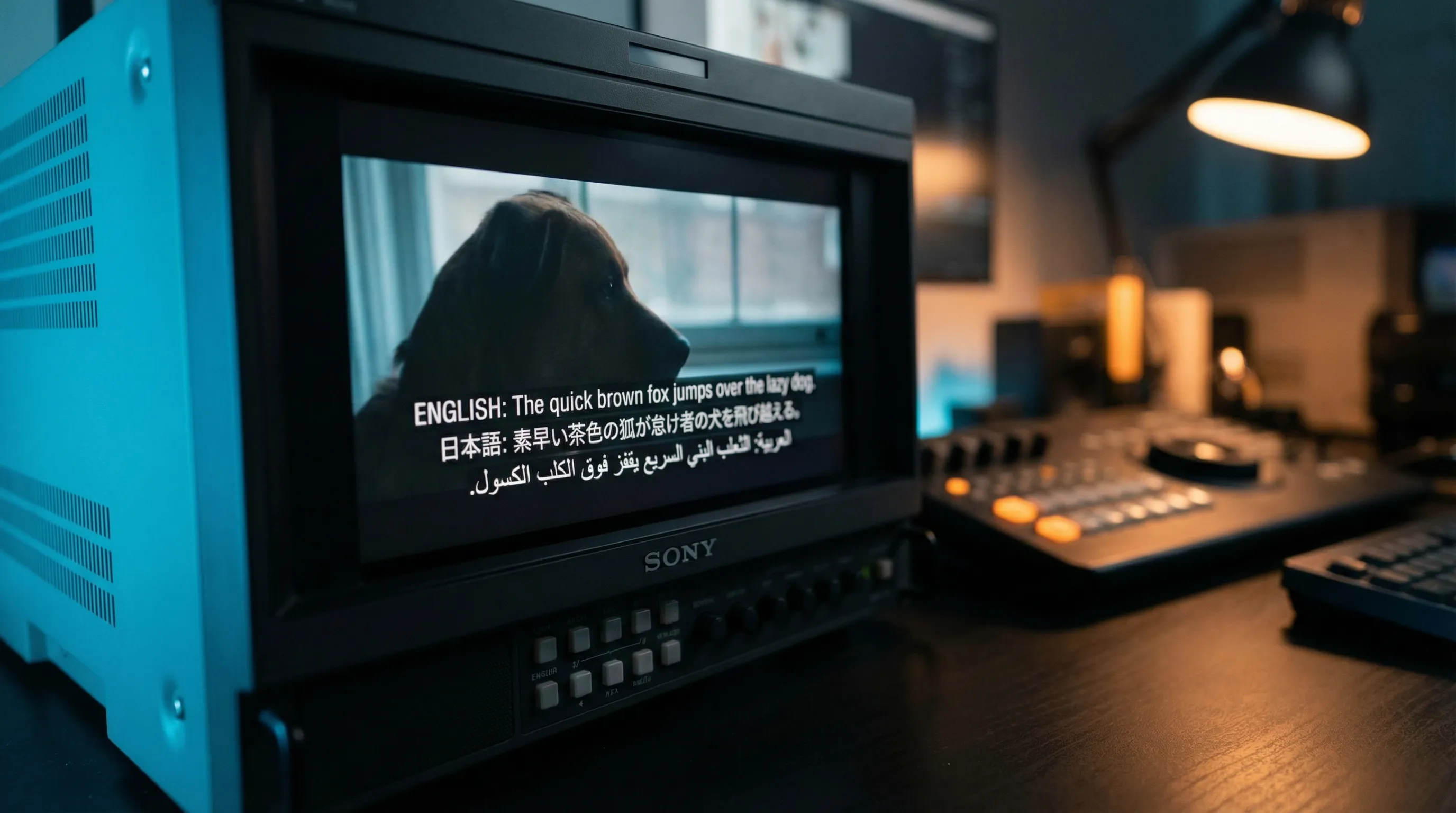

- Multilingual access: Transcripts and subtitles are generated in multiple languages, making content accessible across language barriers.
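As a rough sketch of the first item in the list above, tags can be derived from what is actually said in a video rather than from what someone remembered to type. The version below is a deliberately naive word-frequency baseline, not the method any specific product uses. The stopword list and the `suggest_tags` function are assumptions made for illustration.

```python
import re
from collections import Counter

# Small illustrative stopword list (assumption); a real system would use a fuller one.
STOPWORDS = {
    "the", "a", "an", "and", "or", "of", "to", "in", "for", "is", "are",
    "we", "our", "this", "that", "on", "with", "will", "it", "be",
}


def suggest_tags(transcript: str, max_tags: int = 3) -> list[str]:
    """Suggest tags from the most frequent non-stopword terms in a transcript."""
    words = re.findall(r"[a-z]+", transcript.lower())
    counts = Counter(w for w in words if w not in STOPWORDS and len(w) > 2)
    # Sort by frequency, then alphabetically so ties are deterministic.
    ranked = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))
    return [word for word, _ in ranked[:max_tags]]
```

Even this baseline reads every word of the transcript, which a human tagger skimming a long recording rarely does. Production systems would typically replace the frequency count with topic or entity extraction.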

Adaptive Organization

Traditional DAMs require administrators to design and maintain folder hierarchies and taxonomy structures. An AI-native DAM organizes content dynamically based on actual content characteristics, adapting as the library grows and evolves.

This means no more arguments about folder structure. No more orphaned files in miscategorized directories. No more content that becomes unfindable because someone put it in the wrong folder three years ago.

The Cost of Retrofitting AI onto Legacy DAMs

Some traditional DAM vendors are responding to market pressure by adding AI features to their existing platforms. While this is better than nothing, the bolt-on approach has inherent limitations:

Architecture constraints: Legacy DAMs were built around file-and-folder paradigms with relational databases. Retrofitting semantic search, vector embeddings, and real-time AI processing onto this architecture requires compromises that limit performance and capability.

Data model limitations: Traditional DAMs store metadata as discrete fields (title, description, tags). AI-native platforms use rich, multi-dimensional representations that capture the full complexity of video content. Retrofitting this onto an existing data model typically means running AI analysis but only storing a fraction of the results.
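The difference between the two data models can be sketched in a few lines. `FlatRecord` mirrors the discrete fields named above, while `Moment` and `IndexedVideo` are hypothetical shapes for time-coded analysis results. A real platform's schema would be richer and is not known here.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class FlatRecord:
    """Discrete-field metadata, as described for traditional DAMs."""
    title: str
    description: str
    tags: list[str] = field(default_factory=list)


@dataclass
class Moment:
    """One time-coded observation produced by analysis (hypothetical shape)."""
    start_s: float
    end_s: float
    kind: str                      # e.g. "speech", "object", "on_screen_text"
    value: str
    speaker: Optional[str] = None  # only meaningful for speech


@dataclass
class IndexedVideo:
    record: FlatRecord
    moments: list[Moment] = field(default_factory=list)

    def find(self, kind: str, needle: str) -> list[Moment]:
        """Return moments of a given kind whose value mentions `needle`."""
        needle = needle.lower()
        return [m for m in self.moments
                if m.kind == kind and needle in m.value.lower()]
```

A system that can only persist `FlatRecord` has nowhere to put the `moments` list, so most of what the analysis produced is discarded or squeezed into a tag field.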

Processing pipeline gaps: AI-native platforms are built around continuous processing pipelines that analyze, index, and enrich content in real time. Legacy platforms that add AI processing as an afterthought often rely on batch processing, creating delays between upload and full AI enrichment.

User experience disconnect: When AI is an add-on, the user interface typically reflects the original non-AI design with AI features tucked into secondary menus or separate views. In an AI-native platform, every interaction (search, browse, organize, share) is designed around AI capabilities from the start.

What to Look for in an AI-Native Video DAM

If your organization is evaluating video management platforms, here are the capabilities that distinguish a truly AI-native solution:

- Automatic transcription and translation in dozens of languages, available immediately after upload.

- Semantic search that understands natural language queries and returns timestamped results within videos.

- Visual content recognition that identifies objects, scenes, actions, and on-screen text.

- Speaker identification that tracks who says what across your entire video library.

- AI-generated summaries and chapters that make long videos navigable without watching them end to end.

- Smart organization that automatically categorizes and relates content based on meaning, not just manually assigned tags.

- Built-in collaboration tools that let teams review, comment, and approve video content with time-coded precision.

- API-first architecture that allows integration with existing tools and workflows.

- Enterprise security and compliance, including role-based access control and data residency options.

WIKIO AI was built from the ground up as an AI-native video collaboration platform. Every feature, from upload to delivery, is designed around the principle that AI should handle the tedious work of understanding, organizing, and enriching video content so that humans can focus on the creative and strategic decisions that matter.

The Competitive Advantage of Finding What You Have

Here is a statistic that should concern any organization with a large video library: studies consistently show that 60 to 80 percent of existing video content is never reused because people cannot find it. They reshoot, re-record, and re-edit content that already exists somewhere in the archive.

An AI-native video DAM eliminates this waste. When every second of every video is searchable, and when the system understands meaning rather than just matching keywords, the entire library becomes an active, accessible resource. Content created last year surfaces when it becomes relevant today. B-roll shot for one project is discoverable for another. Training content in one language becomes available in twenty.

The organizations that treat video as a findable, reusable, living asset, rather than as files dropped into folders and forgotten, gain a compounding advantage over those that do not. An AI-native video DAM is the infrastructure that makes this possible.